With the rise of open-source and open-weight models, and growing concerns around data sovereignty and privacy, many people have started building and hosting their own platforms and LLMs. Open-weight models give you much greater control over the deployment: you can tailor costs, infrastructure, model size, task-specific fine-tuning, security and more. That control makes a serving engine like vLLM very attractive to individuals, companies and governments.

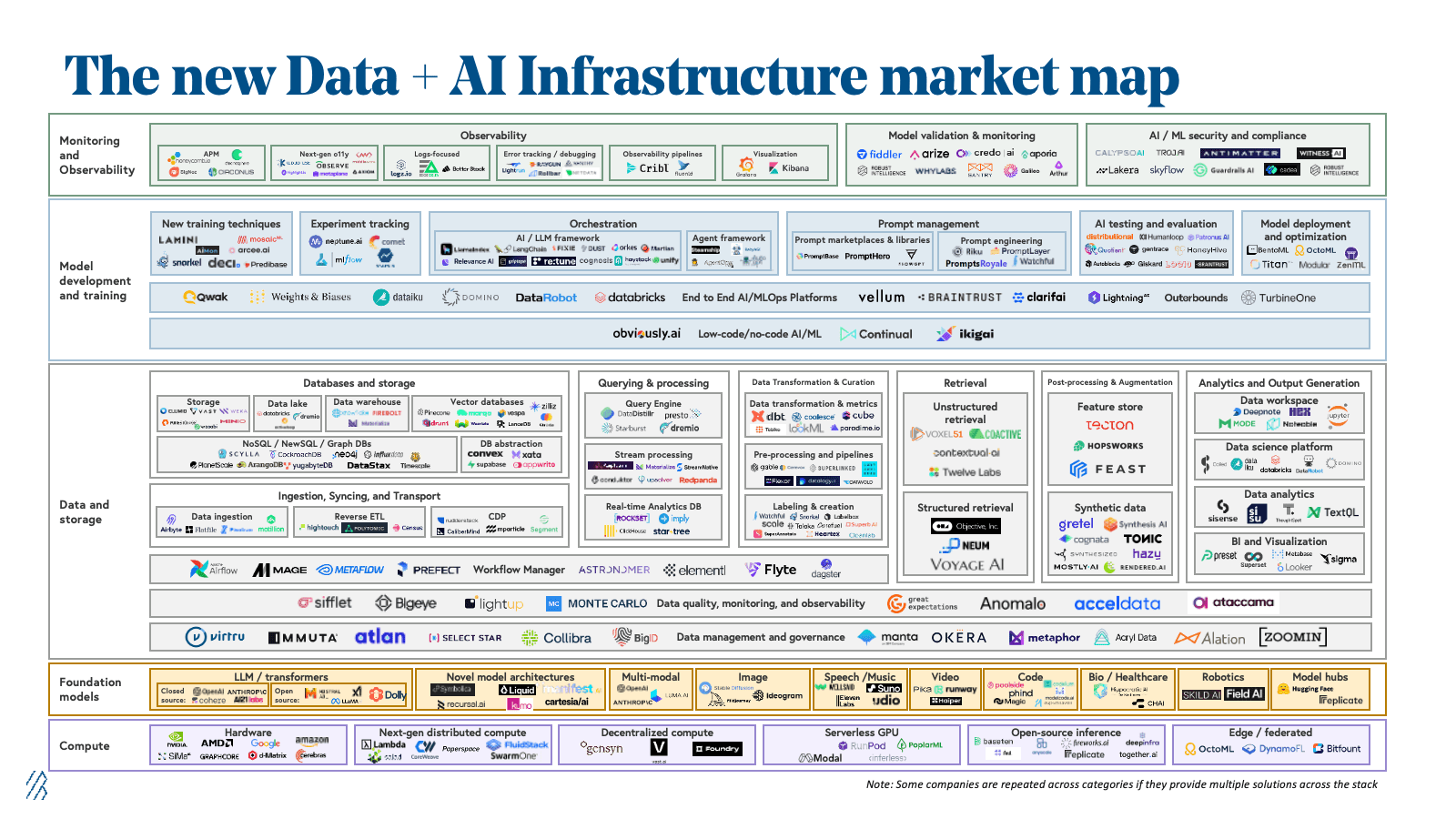

The current AI/ML and data landscape is vast, and that vastness is overwhelming for developers who are just getting started; understanding exactly what is going on is something of a Herculean task. If you are a developer who simply wants to use a model, you probably don't care what is happening underneath. You just want something that works.

vLLM

vLLM is a high-performance inference and serving engine for LLMs. It is easy to use and easy to set up, while also being very memory-efficient and scalable. vLLM supports hardware from multiple vendors, whether that is NVIDIA or AMD GPUs or Google TPUs.

vLLM is also platform-agnostic, so it doesn't much care where it is installed: it runs on cloud providers such as AWS and GCP, on Linux (and on Windows via WSL), in Docker and on Kubernetes (K8s). This makes it extremely flexible while staying user-friendly, and you can deploy models locally with very little hassle.
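To give a sense of how little is needed once a model is being served, here is a minimal client sketch. It assumes a vLLM OpenAI-compatible server started with something like `vllm serve <model>` and listening on its default address, `http://localhost:8000`. The model name and the helper names `build_chat_request` and `chat` are hypothetical choices for illustration, and only Python's standard library is used.

```python
import json
import urllib.request

# Assumption: a vLLM OpenAI-compatible server is already running, e.g. started with
#   vllm serve Qwen/Qwen2.5-1.5B-Instruct
# and listening on the default address http://localhost:8000.
BASE_URL = "http://localhost:8000/v1"
MODEL = "Qwen/Qwen2.5-1.5B-Instruct"  # hypothetical choice; use whatever model you served


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the prompt to the running server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the generated message under choices[0].
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat("In one sentence, what is vLLM?")` should return the model's reply. Because the server speaks the OpenAI API, pointing the official `openai` Python client at the same base URL should work as well.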

I've personally been using it instead of tools like Ollama for local setups. It is a production-ready tool designed for high concurrency and high throughput, so the same setup remains relevant in production, and it is just as easy to set up, so I don't see the downside.

There is also plenty of clear documentation on the vLLM docs website, which makes the whole experience much more enjoyable.

Personally, I use vLLM to run a local LLM on my Linux home server, on an AMD GPU, inside a lightweight K8s environment.
